In my search for a good project that is relevant to the industry, I came across opencores.org, which holds a vast collection of open-source digital blocks. Some have a SystemVerilog testbench, and some even have a simple UVM-based testbench.

One of the most interesting topics I remember from my university degree is Computer Architecture, and especially SDRAM. So I searched for an SDRAM controller to build a UVM testbench around, and found one.

From the IP info section:

“This IP core is that of a small, simple SDRAM controller used to provide a 32-bit pipelined Wishbone interface to a 16-bit SDRAM chip.

When accessing open rows, reads and writes can be pipelined to achieve full SDRAM bus utilization, however switching between reads & writes takes a few cycles.

The row management strategy is to leave active rows open until a row needs to be closed for a periodic auto refresh or until that bank needs to open another row due to a read or write request.

This IP supports 4 open active rows (one per bank).”

So I went and did my reading…

Understanding SDRAM: A Deep Dive Through Testing

The first thing that struck me was how SDRAM is fundamentally organized. Unlike simpler memories, SDRAM is divided into banks (in our case four, as the IP description above says; the SDRAM_BANK_W parameter is the width of the bank address in bits, not the number of banks), and each bank contains rows and columns. It’s like having multiple spreadsheets (banks) with rows and columns in each one. This structure allows for some clever optimizations in how we access data.
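To make the bank/row/column picture concrete, here is a minimal Python sketch that splits a flat word address into its three parts. The field widths (2 bank bits, 12 row bits, 8 column bits) and the row:bank:column ordering are my assumptions for illustration; the bank count follows the "one per bank" note in the IP description, but the controller's real address mapping may differ.

```python
from typing import NamedTuple


class SdramAddress(NamedTuple):
    bank: int
    row: int
    col: int


def split_address(word_addr: int, bank_w: int = 2,
                  row_w: int = 12, col_w: int = 8) -> SdramAddress:
    """Split a flat word address into (bank, row, col).

    Assumed layout (hypothetical, not taken from the controller source):
    the column is in the low bits, the bank above it, the row on top.
    """
    col = word_addr & ((1 << col_w) - 1)
    bank = (word_addr >> col_w) & ((1 << bank_w) - 1)
    row = (word_addr >> (col_w + bank_w)) & ((1 << row_w) - 1)
    return SdramAddress(bank, row, col)


print(split_address(0x100))  # first column of the next bank
print(split_address(0x4FF))  # last column of row 1 in bank 0
```

With this layout, walking linearly through memory moves to the next bank every 256 words, which is one way to let consecutive pages sit in different banks.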

Benefits of this bank structure:

- Bank Interleaving: Can keep one row open per bank, enabling faster access when alternating between banks

- Efficiency: While one bank is being precharged, can access another bank

- Reduced Latency: If data spans multiple rows, can have one row open in each bank

For example, with active rows in Bank 0 and Bank 1:

| Time | Bank 0 | Bank 1 |
|------|--------|--------|
| 1 | Read Row A | - |
| 2 | - | Read Row B |
| 3 | Read Row A | - |
| 4 | - | Read Row B |

When you want to read or write data, you can’t just directly access it: you need to follow a specific dance of commands:

- First, you need to “activate” a row in a bank (like opening a specific spreadsheet and selecting a row)

- Then you can perform reads or writes to different columns in that row

- Finally, you need to “precharge” the bank before accessing a different row
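The three steps above, combined with the "leave rows open" policy from the IP description, can be sketched as a tiny bookkeeping model. This is only an illustration in Python, not the controller's RTL: the class name `OpenRowModel` and the command strings are made up for this post, and timing and refresh are ignored entirely.

```python
from typing import Dict, List, Optional


class OpenRowModel:
    """Tracks which row is open in each bank and lists the commands an access needs."""

    def __init__(self, num_banks: int = 4) -> None:
        # None means the bank is idle (precharged), otherwise the open row number.
        self.open_row: Dict[int, Optional[int]] = {b: None for b in range(num_banks)}

    def access(self, bank: int, row: int, col: int, write: bool = False) -> List[str]:
        cmds: List[str] = []
        current = self.open_row[bank]
        if current is not None and current != row:
            # A different row is open in this bank: close it first.
            cmds.append(f"PRECHARGE bank={bank}")
            current = None
        if current is None:
            # Bank is idle: open the requested row.
            cmds.append(f"ACTIVATE bank={bank} row={row}")
            self.open_row[bank] = row
        cmds.append(f"{'WRITE' if write else 'READ'} bank={bank} col={col}")
        return cmds


model = OpenRowModel()
print(model.access(0, 5, 1))              # row miss on an idle bank: ACTIVATE + READ
print(model.access(0, 5, 2))              # row hit: READ only
print(model.access(0, 6, 0, write=True))  # row conflict: PRECHARGE + ACTIVATE + WRITE
```

A row hit costs a single command, while a row conflict costs three, which is why keeping rows open pays off when accesses stay within the same row.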

Through my testbench implementation, I’ve seen why this matters. The SDRAM_MHZ parameter in our controller is set to 50 MHz, and there are strict timing requirements between these operations. For instance, there’s a parameter called tRCD (the RAS-to-CAS delay, i.e. row-to-column delay) which enforces a minimum time between activating a row and being able to read/write to it. In our case, it’s set to 18ns – that’s how long we need to wait after selecting a row before we can access its data! At a 20ns clock period, that fits within a single clock cycle.
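Since the controller works in clock cycles while the datasheet speaks in nanoseconds, it helps to see the conversion spelled out. The helper below is a sketch of the usual "round up to whole cycles" rule; the function name is mine, and I am not claiming the controller computes it exactly this way.

```python
import math


def ns_to_cycles(t_ns: float, clock_mhz: float) -> int:
    """Smallest whole number of clock cycles that covers t_ns."""
    # t_ns / period_ns, with period_ns = 1000 / clock_mhz
    return math.ceil(t_ns * clock_mhz / 1000.0)


print(ns_to_cycles(18, 50))   # tRCD at 50 MHz (20 ns period)
print(ns_to_cycles(18, 100))  # the same tRCD at a faster 100 MHz clock
```

At 50 MHz the 18ns requirement is covered by one cycle; at 100 MHz the same 18ns would need two cycles, so the cycle counts have to be recomputed whenever the clock changes.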

What’s really clever is how SDRAMs handle burst operations. When writing the driver for our testbench, I implemented support for different burst lengths (1, 2, 4, or 8 words). Once you set up the initial address, the SDRAM automatically provides the subsequent data words without needing new addresses. It’s like telling it “start at cell B5 and give me 4 cells in a row” instead of asking for B5, B6, B7, and B8 individually.
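Here is a small sketch of which column addresses a burst touches. It assumes the sequential burst type of standard SDR SDRAM, where the column counter wraps inside a block aligned to the burst length; the function name is hypothetical and this is not the driver code from my testbench.

```python
from typing import List


def burst_columns(start_col: int, burst_len: int) -> List[int]:
    """Column addresses touched by one sequential burst starting at start_col."""
    if burst_len not in (1, 2, 4, 8):
        raise ValueError("burst length must be 1, 2, 4 or 8")
    # The burst stays inside a block aligned to burst_len and wraps at its end.
    base = start_col & ~(burst_len - 1)
    return [base | ((start_col + i) & (burst_len - 1)) for i in range(burst_len)]


print(burst_columns(8, 4))  # aligned start: four consecutive columns
print(burst_columns(5, 4))  # unaligned start: wraps back to the start of the block
```

The wrap-around on an unaligned start is easy to miss, so a scoreboard that predicts burst data has to model it too.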

The most challenging part of my testing has been dealing with refresh operations. SDRAMs need periodic refreshing to maintain their data (hence the “Dynamic” in SDRAM). Our controller automatically handles this with a refresh counter (SDRAM_REFRESH_CYCLES), but I needed to ensure our test sequences didn’t conflict with refresh operations. As someone with a bit of a green thumb, I think it’s actually pretty similar to having to water plants regularly – if you don’t refresh the memory cells, they “dry out” and lose their data!
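To get a feeling for how often a refresh has to be issued, here is a back-of-the-envelope calculation. The 64 ms retention window and the 4096 rows are assumptions (typical values for a device of this size), and I am not claiming this is exactly how SDRAM_REFRESH_CYCLES is derived inside the controller.

```python
def refresh_interval_cycles(clock_mhz: int, retention_ms: int = 64,
                            num_rows: int = 4096) -> int:
    """Clock cycles available between two auto-refresh commands."""
    # Total clock cycles in the retention window (1 ms = 1000 * clock_mhz cycles).
    total_cycles = retention_ms * 1000 * clock_mhz
    # Every row must be refreshed once per window, so spread the refreshes evenly.
    return total_cycles // num_rows


print(refresh_interval_cycles(50))  # cycles between refreshes at 50 MHz
```

Under these assumptions a refresh is due roughly every 15.6 µs, so any test sequence longer than a few hundred cycles will eventually run into one.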

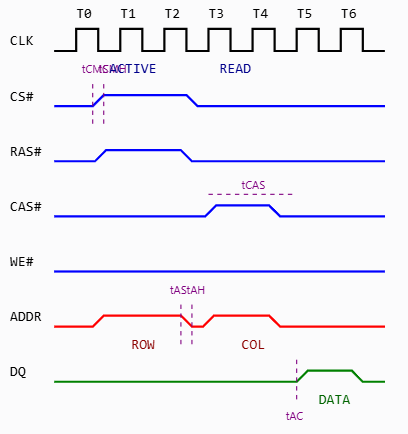

The Magic in TIMING

- Command Setup/Hold Times (tCMS/tCMH):
  - Control signals (CS#, RAS#, CAS#, WE#) must be stable before the clock edge (setup)
  - And remain stable after the clock edge (hold)
  - These times are typically 1.5ns setup and 1.0ns hold in our IS42VM16400K model

- Address Setup/Hold Times (tAS/tAH):
  - Address signals must be valid before the clock edge
  - And remain valid after the clock edge
  - Similar to command timing: 1.5ns setup, 1.0ns hold

- CAS Latency (tCAS):
  - The delay between READ command and data availability
  - Can be configured as 2 or 3 clock cycles in our model
  - Set through the Mode Register during initialization

- Access Time (tAC):
  - Time from clock edge to valid data during reads
  - Varies based on CAS latency setting
  - For CL=3: tAC3 = 5.5ns in our model

- Clock Considerations:
  - All timing is referenced to the rising edge of the clock
  - The clock itself must maintain minimum high and low times (tCH/tCL)
  - Our testbench runs at 50MHz (20ns period)

In my UVM testbench, these timings are verified by the Memory Model through:

```verilog
// From IS42VM16400K.v timing checks
$setuphold(posedge clk, csb, tCMS, tCMH);  // Command timing
$setuphold(posedge clk, addr, tAS, tAH);   // Address timing
$setuphold(posedge clk, dqm, tCMS, tCMH);  // Data mask timing
```
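For readers who have not met `$setuphold` before, the idea behind it can be sketched in a few lines of Python: a signal must not change inside a window around the clock edge. This is a simplified illustration with a made-up function name, and the handling of the exact window endpoints is my simplification rather than the simulator's precise rule.

```python
from typing import List


def setuphold_violations(edge_ns: float, change_times_ns: List[float],
                         setup_ns: float, hold_ns: float) -> List[str]:
    """Report signal changes that fall inside the setup/hold window of one clock edge."""
    violations: List[str] = []
    for t in change_times_ns:
        if edge_ns - setup_ns < t < edge_ns:
            # Changed too late before the edge.
            violations.append(f"setup violation: changed {edge_ns - t:.1f} ns before edge")
        elif edge_ns <= t < edge_ns + hold_ns:
            # Changed too soon after the edge.
            violations.append(f"hold violation: changed {t - edge_ns:.1f} ns after edge")
    return violations


# Clock edge at 20 ns, with the 1.5 ns setup / 1.0 ns hold values quoted above.
print(setuphold_violations(20.0, [18.0], 1.5, 1.0))  # outside the window
print(setuphold_violations(20.0, [19.0], 1.5, 1.0))  # inside the setup window
print(setuphold_violations(20.0, [20.5], 1.5, 1.0))  # inside the hold window
```

With a 20ns clock period the forbidden window is only 2.5ns wide, so a driver that changes its outputs well away from the rising edge has plenty of margin.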